A/B Testing

Problem Overview

Tailoring an application can encourage desired user behavior

Companies face the ongoing challenge of presenting users with the version of an application that most effectively achieves a desired outcome. This outcome can be anything from increasing sales to improving click-through rates or deepening user engagement with the application. The desired outcome is often reported as a conversion rate: the percentage of website visitors or users who take a desired action, such as making a purchase, filling out a form, or signing up for a newsletter.
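As a minimal illustration of the conversion rate definition above, the following Python sketch computes the percentage from two counts. The function name and the example numbers are hypothetical and chosen only for illustration.

```python
def conversion_rate(conversions: int, visitors: int) -> float:
    """Return the percentage of visitors who took the desired action."""
    if visitors <= 0:
        raise ValueError("visitors must be positive")
    if not 0 <= conversions <= visitors:
        raise ValueError("conversions must be between 0 and visitors")
    return 100.0 * conversions / visitors


# Hypothetical numbers: 48 purchases from 1,200 visitors.
rate = conversion_rate(48, 1200)
print(f"Conversion rate: {rate:.1f}%")  # Conversion rate: 4.0%
```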

There are a number of approaches that companies can take when trying to optimize their application to increase their conversion rate:

Surveys: Surveys can be used to collect feedback from users about their experience with a website or campaign. This feedback can be used to identify areas of improvement and make changes to the design or content.

User testing: User testing involves recruiting users to test a website or campaign and provide feedback on their experience. This feedback can be used to identify usability issues and make improvements.

Expert reviews: Expert reviews involve having a group of experts evaluate a website or campaign based on best practices and their own expertise. This can be useful in identifying areas of improvement and making changes to the design or content.

Analytics: Analytics tools can be used to track user behavior and identify areas of the website or campaign that are performing well or poorly. This data can be used to make data-driven decisions and optimize the design or content.

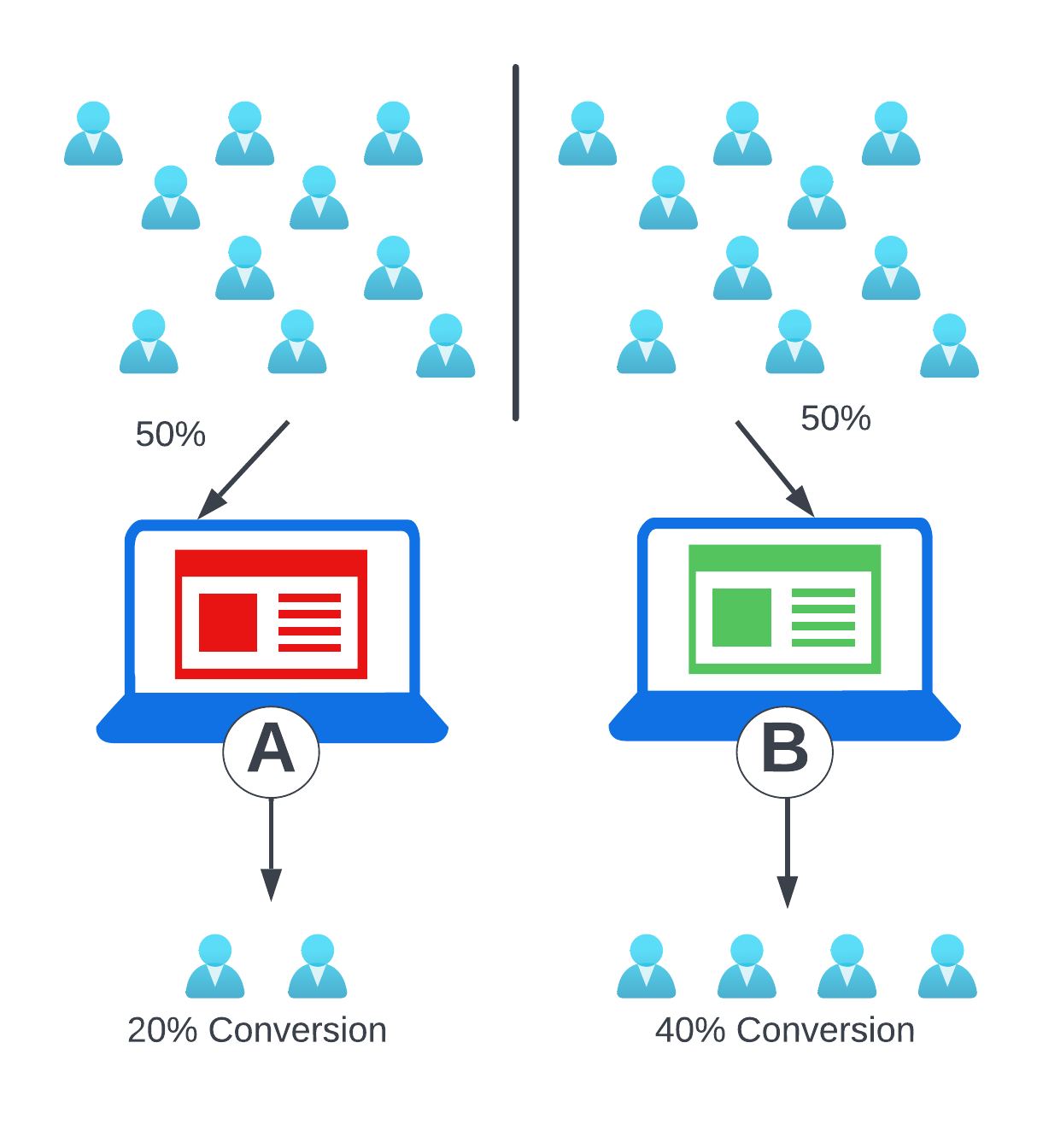

A/B testing: As discussed in more detail below, A/B testing involves randomly dividing a sample population into two or more groups and exposing each group to a different version of the website or campaign. The results are then analyzed to determine which version achieved the higher conversion rate.

Hypotheses, experiments, and results

A/B testing (also called split testing) is a method for comparing how users engage with two or more distinct versions of a website. The versions may differ visibly in the user interface, or they may differ in hardware, software, or APIs in ways that are not visible to the user but can still affect the user experience.

Before conducting an A/B test, a company forms a hypothesis about how a modification is expected to affect a specific user behavior. Next, a variant of the original site is created that features the proposed change, and an A/B testing tool randomly directs a set percentage of users to the variant and the remainder to the control (the original version).
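One common way to split traffic is to hash a stable user identifier so that the same user always lands in the same group. The Python sketch below illustrates that idea; it is an assumption about how such a tool might work, not a description of any particular product. The names `assign_variant`, `experiment`, and `variant_share` are hypothetical.

```python
import hashlib


def assign_variant(user_id: str, experiment: str, variant_share: float = 0.5) -> str:
    """Deterministically assign a user to 'variant' or 'control'.

    variant_share is the fraction of users (0.0 to 1.0) sent to the variant.
    """
    if not 0.0 <= variant_share <= 1.0:
        raise ValueError("variant_share must be between 0.0 and 1.0")
    # Hash the experiment name together with the user ID so that the same
    # user can fall into different groups in different experiments.
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).digest()
    # Map the first 4 bytes of the hash to a number in [0, 1).
    bucket = int.from_bytes(digest[:4], "big") / 2**32
    return "variant" if bucket < variant_share else "control"


# Hypothetical usage: send 20% of users to the variant.
for uid in ["user-1", "user-2", "user-3"]:
    print(uid, assign_variant(uid, "new-checkout-button", variant_share=0.2))
```

Because the assignment depends only on the identifier and the experiment name, a returning user sees the same version on every visit without the tool having to store the assignment.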

Once users are assigned to the variant or the control, event data related to the desired user behavior can be gathered from each group and analyzed. The behavior observed in each group is compared to determine whether the data support the hypothesis. By enabling distinct groups of users to interact with each version and using data analysis to compare their responses against the proposed goal, companies can make an informed decision as to whether to roll out the change to the whole user base.
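The comparison step can be sketched with a two-proportion z-test, one standard way to judge whether two conversion rates differ by more than chance would explain. The source does not specify which statistical test is used, so this choice is an assumption, and the counts in the example are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Return (z, two-sided p-value) comparing group B's rate with group A's."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the null hypothesis that both groups convert equally.
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("standard error is zero; rates are all 0% or all 100%")
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical counts: control converts 200 of 5,000; variant converts 250 of 5,000.
z, p = two_proportion_z_test(200, 5000, 250, 5000)
print(f"z = {z:.2f}, p = {p:.4f}")
```

A small p-value (commonly below 0.05) suggests the observed difference is unlikely to be due to chance alone. The threshold should be chosen before the test starts.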

A/B testing is an attractive solution for optimizing conversion rate

A/B testing is a widely used and effective method for increasing conversion rates for several reasons:

Objective results: A/B testing provides objective results that are based on data and statistical analysis, rather than subjective opinions or assumptions. This means that decisions can be made based on evidence and facts rather than guesswork.

User behavior insights: A/B testing provides quantitative insights into user behavior and preferences that can be used to optimize the user experience and improve conversion rates. This data can be used to make informed decisions about changes to the website or app that are most likely to improve user engagement and drive conversions.

Cost-effective: A/B testing can be a cost-effective way to optimize a website or app, as it can identify the changes that are most likely to have a positive impact on conversion rates, without the need for a complete redesign or overhaul.

Continuous improvement: A/B testing allows for continuous improvement over time, as new ideas and changes can be tested and implemented based on the results of previous tests. This can lead to significant gains in conversion rates and revenue over time.

Not all problems are amenable to A/B testing

While A/B testing has many advantages compared to other approaches for optimizing applications, it is important to note that there are some problems that A/B testing may not solve on its own:

Fundamental design flaws: A/B testing can only optimize the performance of existing designs or features, but it cannot fix fundamental flaws in the design or architecture of a website or application.

Lack of traffic: A/B testing requires enough traffic to generate statistically significant results. If the sample size is too small, the results of the test may not be reliable or meaningful. For example, if only a few visitors are exposed to each version, an observed difference could easily be due to chance rather than a real difference in performance. Therefore, if a website or application does not have enough traffic, A/B testing may not be effective.

Limited scope: A/B testing can only test one or a few elements at a time, and may not capture the full range of user behavior or preferences.

Limited insights: While A/B testing can provide insights into user behavior and preferences, it may not provide deeper insights into the underlying reasons for user behavior, which may require additional research and analysis.

Short-term focus: A/B testing typically focuses on short-term gains and may not address long-term goals or sustainability.
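The "lack of traffic" limitation above can be made concrete with a rough sample-size estimate. The Python sketch below uses a standard normal-approximation formula for comparing two proportions. The baseline rate, target rate, significance level, and power are hypothetical inputs, and the result is an approximation rather than an exact requirement.

```python
from math import ceil
from statistics import NormalDist


def sample_size_per_group(p_control: float, p_variant: float,
                          alpha: float = 0.05, power: float = 0.8) -> int:
    """Approximate number of users needed in each group to detect the
    difference between two conversion rates (two-sided test)."""
    if p_control == p_variant:
        raise ValueError("the two rates must differ")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for significance
    z_beta = NormalDist().inv_cdf(power)           # critical value for power
    variance = p_control * (1 - p_control) + p_variant * (1 - p_variant)
    n = (z_alpha + z_beta) ** 2 * variance / (p_control - p_variant) ** 2
    return ceil(n)


# Hypothetical goal: detect a lift from a 4% to a 5% conversion rate.
print(sample_size_per_group(0.04, 0.05))
```

Under these assumptions the estimate comes to several thousand users per group, which shows why a low-traffic site may need a long time to reach a reliable conclusion. Smaller expected differences require substantially more traffic.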

While A/B testing is well-suited for guiding evidence-based decisions regarding application components and features, it should be used in conjunction with other research methods and should not be relied on as the sole solution to all optimization problems.

Despite some shortcomings, A/B testing is a powerful tool for optimizing websites and applications, improving user engagement, and driving conversions. By systematically testing different versions of a website or application (also known as variants) and analyzing the results, businesses can make data-driven decisions that lead to better user experiences and improved conversion metrics.